You made it! It is the last practical of the Applied Text Mining course.

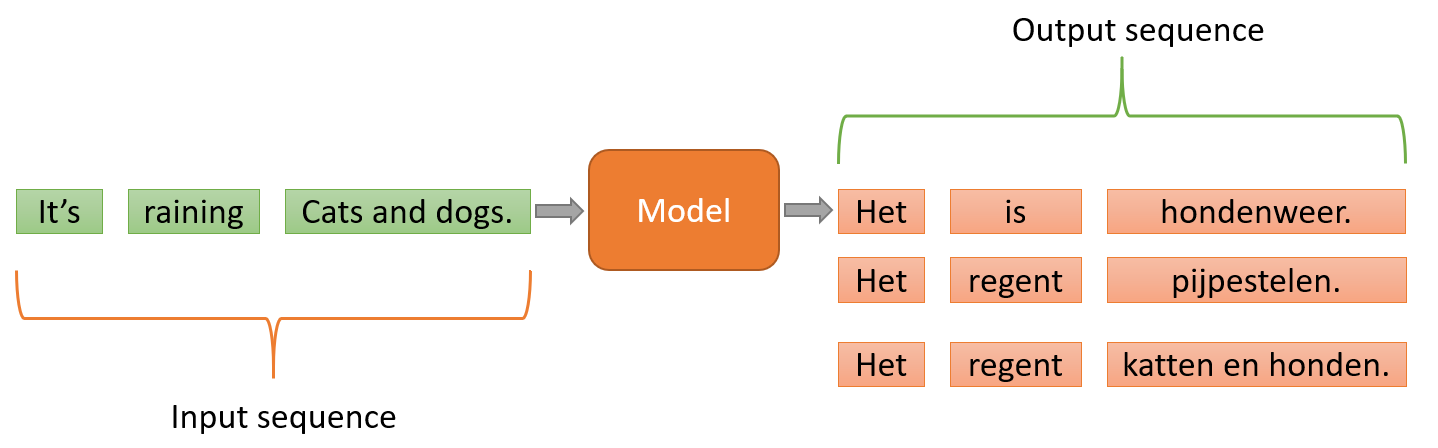

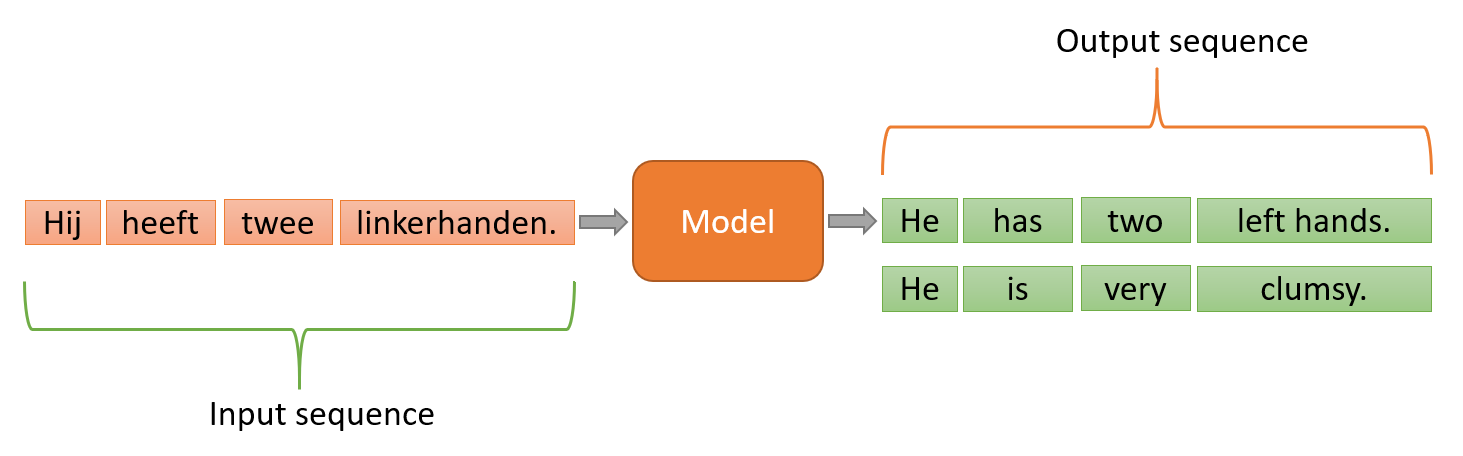

In this practical, we will create models for neural machine translation. Today we are curious to see how a simple deep learning-based model translates a sentence into its counterpart. See these examples:

The aim of this practical is to convert a Dutch sentence to its English counterpart using a Neural Machine Translation (NMT) system. We will implement this task by building a simple Sequence-to-Sequence model (a special class of Recurrent Neural Network architectures) with the help of the Keras library.

Today we will use the following libraries. Take care to have them installed!

import string

import re

import statistics

from numpy import array, argmax, random, take

import pandas as pd

from keras.models import Sequential

from keras.layers import Dense, LSTM, Embedding, Bidirectional, RepeatVector, TimeDistributed

from keras.preprocessing.text import Tokenizer

from keras.callbacks import ModelCheckpoint

from keras.preprocessing.sequence import pad_sequences

from keras.models import load_model

from keras import optimizers

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

# matplotlib inline

pd.set_option('display.max_colwidth', 200)

1. In this practical we will use a data set of tab-delimited bilingual sentence pairs from http://www.manythings.org/anki/. Use the following two functions (read_text and to_lines) to read the nld.txt data set (also provided on the course webpage next to the practical link). This data set contains phrases in Dutch with their translation in English. Convert the text sequences to an array and check the first items in your array.

# function to read raw text file

def read_text(filename):

    # open the file, read all text, and close the file again on exit

    with open(filename, mode='rt', encoding='utf-8') as file:

        text = file.read()

    return text

# split a text into sentences

def to_lines(text):

    # one sentence pair per line

    sents = text.strip().split('\n')

    # fields within a line are separated by tabs

    sents = [i.split('\t') for i in sents]

    return sents

data = read_text("nld-eng/nld.txt")

nld_eng = to_lines(data)

nld_eng = array(nld_eng)

nld_eng.shape

(54972, 3)

nld_eng

array([['Go.', 'Lopen!',

'CC-BY 2.0 (France) Attribution: tatoeba.org #2877272 (CM) & #7764436 (LinguisticFusion)'],

['Go.', 'Vooruit.',

'CC-BY 2.0 (France) Attribution: tatoeba.org #2877272 (CM) & #7915821 (Elsofie)'],

['Hi.', 'Hoi.',

'CC-BY 2.0 (France) Attribution: tatoeba.org #538123 (CM) & #537889 (Dorenda)'],

...,

['Always use distilled water in steam irons because using ordinary water will cause a mineral build-up over time that will clog the steam holes.',

'Gebruik altijd gedistilleerd water in stoomstrijkijzers, want gewoon water zorgt voor mineraalophoping dat de stoomgaatjes na verloop van tijd verstopt.',

'CC-BY 2.0 (France) Attribution: tatoeba.org #3020388 (Delian) & #3037091 (Citrine)'],

["If you translate from your second language into your own native language, rather than the other way around, you're less likely to make mistakes.",

'Als je vanuit je tweede taal naar je eigen moedertaal vertaalt, in plaats van andersom, maak je minder snel fouten.',

'CC-BY 2.0 (France) Attribution: tatoeba.org #1230823 (CK) & #8627687 (MarijnKp)'],

["If someone who doesn't know your background says that you sound like a native speaker, it means they probably noticed something about your speaking that made them realize you weren't a native speaker. In other words, you don't really sound like a native speaker.",

'Als iemand die je achtergrond niet kent zegt dat je klinkt als een moedertaalspreker betekent dat dat diegene waarschijnlijk iets in je spreken opgemerkt heeft dat hem deed realiseren dat je geen moedertaalspreker bent. Met andere woorden, je klinkt niet echt als een moedertaalspreker.',

'CC-BY 2.0 (France) Attribution: tatoeba.org #953936 (CK) & #1056762 (ReneeMona)']],

dtype='<U286')

With code like nld_eng = nld_eng[:1000,:] you can use a subset of the data, for example the first 1000 sentence pairs, to reduce the training time of the model. Be aware that training on less data will lower the quality of the translations.
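As a small illustration of the file layout described above, the sketch below parses two lines in the same three-field format (English, Dutch, attribution) and keeps only the two sentence columns. The sample lines and the helper name to_pairs are made up for this example and are not part of the practical.

```python
# Two made-up lines in the same layout as nld.txt: English <tab> Dutch <tab> attribution.
sample_text = (
    "Go.\tLopen!\tCC-BY 2.0 (France) Attribution: tatoeba.org #1 (A) & #2 (B)\n"
    "Hi.\tHoi.\tCC-BY 2.0 (France) Attribution: tatoeba.org #3 (C) & #4 (D)\n"
)


def to_pairs(text):
    """Split raw text into [english, dutch] pairs and drop the attribution field."""
    rows = [line.split("\t") for line in text.strip().split("\n")]
    return [row[:2] for row in rows]


pairs = to_pairs(sample_text)
print(pairs)
```

Dropping the third column is optional; the practical keeps it in the array and simply never uses it.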

2. Use the maketrans() function to remove punctuation from the nld_eng array. maketrans() is a method of the built-in str type that constructs a translation table, i.e. it specifies which characters should be replaced in a string and which characters should be deleted from it. To apply this table, pass it to the translate() method of a string. Similar to the example below, use maketrans() and translate() to remove punctuation from the nld_eng array.

### Here you see an example on how to use the maketrans() function ###

# specify the list of characters that need to be replaced

str1 = "mtex"

# specify the list of characters with which the characters need to be replaced

str2 = "dwoo"

# specify the list of characters that needs to be deleted

str3 = "u"

# target string

temp_str = "text mining"

# using maketrans() to construct a translate table

table = temp_str.maketrans(str1, str2, str3)

# Printing original string

print ("The string before translating is : ", end ="")

print (temp_str)

# using translate() to make translations.

print ("The string after translating is : ", end ="")

print (temp_str.translate(table))

The string before translating is : text mining

The string after translating is : woow dining

Note that it is possible to replace one character with two or more. You need to supply a dict as argument to maketrans().
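A minimal sketch of the dict form mentioned in the note: each key is a single character, and each value is either a replacement string of any length or None to delete the character. The input string is made up for illustration.

```python
# Keys are single characters; values are replacement strings or None (delete).
table = str.maketrans({"x": "ks", "&": " and ", "!": None})

result = "text&mining!".translate(table)
print(result)  # tekst and mining
```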

nld_eng[:,0] = [s.translate(str.maketrans('', '', string.punctuation)) for s in nld_eng[:,0]]

nld_eng[:,1] = [s.translate(str.maketrans('', '', string.punctuation)) for s in nld_eng[:,1]]

nld_eng

array([['Go', 'Lopen',

'CC-BY 2.0 (France) Attribution: tatoeba.org #2877272 (CM) & #7764436 (LinguisticFusion)'],

['Go', 'Vooruit',

'CC-BY 2.0 (France) Attribution: tatoeba.org #2877272 (CM) & #7915821 (Elsofie)'],

['Hi', 'Hoi',

'CC-BY 2.0 (France) Attribution: tatoeba.org #538123 (CM) & #537889 (Dorenda)'],

...,

['Always use distilled water in steam irons because using ordinary water will cause a mineral buildup over time that will clog the steam holes',

'Gebruik altijd gedistilleerd water in stoomstrijkijzers want gewoon water zorgt voor mineraalophoping dat de stoomgaatjes na verloop van tijd verstopt',

'CC-BY 2.0 (France) Attribution: tatoeba.org #3020388 (Delian) & #3037091 (Citrine)'],

['If you translate from your second language into your own native language rather than the other way around youre less likely to make mistakes',

'Als je vanuit je tweede taal naar je eigen moedertaal vertaalt in plaats van andersom maak je minder snel fouten',

'CC-BY 2.0 (France) Attribution: tatoeba.org #1230823 (CK) & #8627687 (MarijnKp)'],

['If someone who doesnt know your background says that you sound like a native speaker it means they probably noticed something about your speaking that made them realize you werent a native speaker In other words you dont really sound like a native speaker',

'Als iemand die je achtergrond niet kent zegt dat je klinkt als een moedertaalspreker betekent dat dat diegene waarschijnlijk iets in je spreken opgemerkt heeft dat hem deed realiseren dat je geen moedertaalspreker bent Met andere woorden je klinkt niet echt als een moedertaalspreker',

'CC-BY 2.0 (France) Attribution: tatoeba.org #953936 (CK) & #1056762 (ReneeMona)']],

dtype='<U286')

3. Convert all words into their lowercase.

# convert to lowercase

for i in range(len(nld_eng)):

    nld_eng[i,0] = nld_eng[i,0].lower()

    nld_eng[i,1] = nld_eng[i,1].lower()

nld_eng

array([['go', 'lopen',

'CC-BY 2.0 (France) Attribution: tatoeba.org #2877272 (CM) & #7764436 (LinguisticFusion)'],

['go', 'vooruit',

'CC-BY 2.0 (France) Attribution: tatoeba.org #2877272 (CM) & #7915821 (Elsofie)'],

['hi', 'hoi',

'CC-BY 2.0 (France) Attribution: tatoeba.org #538123 (CM) & #537889 (Dorenda)'],

...,

['always use distilled water in steam irons because using ordinary water will cause a mineral buildup over time that will clog the steam holes',

'gebruik altijd gedistilleerd water in stoomstrijkijzers want gewoon water zorgt voor mineraalophoping dat de stoomgaatjes na verloop van tijd verstopt',

'CC-BY 2.0 (France) Attribution: tatoeba.org #3020388 (Delian) & #3037091 (Citrine)'],

['if you translate from your second language into your own native language rather than the other way around youre less likely to make mistakes',

'als je vanuit je tweede taal naar je eigen moedertaal vertaalt in plaats van andersom maak je minder snel fouten',

'CC-BY 2.0 (France) Attribution: tatoeba.org #1230823 (CK) & #8627687 (MarijnKp)'],

['if someone who doesnt know your background says that you sound like a native speaker it means they probably noticed something about your speaking that made them realize you werent a native speaker in other words you dont really sound like a native speaker',

'als iemand die je achtergrond niet kent zegt dat je klinkt als een moedertaalspreker betekent dat dat diegene waarschijnlijk iets in je spreken opgemerkt heeft dat hem deed realiseren dat je geen moedertaalspreker bent met andere woorden je klinkt niet echt als een moedertaalspreker',

'CC-BY 2.0 (France) Attribution: tatoeba.org #953936 (CK) & #1056762 (ReneeMona)']],

dtype='<U286')

4. What is the maximum length of a sentence in each of the Dutch and English sets? What about the average length?

# empty lists

eng_l = []

nld_l = []

# populate the lists with sentence lengths

for i in nld_eng[:,0]:

    eng_l.append(len(i.split()))

for i in nld_eng[:,1]:

    nld_l.append(len(i.split()))

length_df = pd.DataFrame({'eng':eng_l, 'nld':nld_l})

length_df.hist(bins = 30)

plt.show()

max(eng_l)

44

max(nld_l)

44

type(nld_l)

list

statistics.mean(nld_l)

5.853307138179437

statistics.mean(eng_l)

5.619569962890199

Coincidentally, the longest Dutch sentence and the longest English sentence both contain 44 words.
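The maximum and the mean are far apart here (44 versus roughly 6 words), which matters when choosing a padding length later on. The sketch below, on a made-up toy corpus, shows one way to check what share of sentences fits within a given length; the helper name coverage is an assumption of this example.

```python
import statistics

# Toy corpus (made up); in the practical these would be nld_eng[:, 0] or nld_eng[:, 1].
sentences = [
    "go",
    "hi there",
    "i like dogs",
    "the light is green",
    "a very long sentence that is rare in this toy corpus",
]

lengths = [len(s.split()) for s in sentences]


def coverage(lengths, max_len):
    """Share of sentences that fit within max_len words without truncation."""
    return sum(n <= max_len for n in lengths) / len(lengths)


print(max(lengths), statistics.mean(lengths))
print(coverage(lengths, 5))
```

A length that covers nearly all sentences but is well below the maximum keeps the padded arrays smaller, at the cost of truncating a few long sentences.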

5. Use the train_test_split function from sklearn to split the data set into training (80%) and test (20%) sets.

train, test = train_test_split(nld_eng, test_size=0.2, random_state=321)

6. Time to tokenize the sentences. Use the Tokenizer class from Keras and fit it on the sentences. Find out the vocabulary size for the Dutch and English sets.

# prepare english tokenizer

eng_tokenizer = Tokenizer()

eng_tokenizer.fit_on_texts(nld_eng[:, 0])

eng_vocab_size = len(eng_tokenizer.word_index) + 1

print('English Vocabulary Size: %d' % eng_vocab_size)

English Vocabulary Size: 9073

# prepare Dutch tokenizer

nld_tokenizer = Tokenizer()

nld_tokenizer.fit_on_texts(nld_eng[:, 1])

nld_vocab_size = len(nld_tokenizer.word_index) + 1

print('Dutch Vocabulary Size: %d' % nld_vocab_size)

# print('Dutch Vocabulary Size:', nld_vocab_size)

Dutch Vocabulary Size: 12794
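To make the "+ 1" in the vocabulary size concrete, here is a simplified pure-Python stand-in for what a word-level tokenizer's word_index looks like: words are numbered from 1 in order of decreasing frequency, and index 0 stays free for padding. This is a sketch for intuition only, not the Keras implementation (it skips filtering and lowercasing), and build_word_index is a hypothetical helper.

```python
from collections import Counter


def build_word_index(sentences):
    """Map each word to an integer, most frequent word first, starting at 1."""
    counts = Counter(word for s in sentences for word in s.split())
    return {word: i for i, (word, _) in enumerate(counts.most_common(), start=1)}


corpus = ["the light is green", "the water is rising", "the light"]
word_index = build_word_index(corpus)

# Index 0 is reserved for padding, hence the + 1.
vocab_size = len(word_index) + 1
print(word_index, vocab_size)
```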

7. Write a function to convert tokens into sequences using an argument for maximum sentence length. Other input arguments to this function are tokenizer and sentences, and its output will be sequences of tokens.

# encode and pad sequences

def encode_sequences(tokenizer, maximum_length, sentences):

    # integer encode sequences

    seq = tokenizer.texts_to_sequences(sentences)

    # pad sequences with 0 values

    seq = pad_sequences(seq, maxlen=maximum_length, padding='post')

    return seq
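The padding step can be pictured with a small pure-Python sketch. It mimics padding='post' together with the Keras default truncating='pre', which means a sequence longer than the maximum length keeps its last tokens and loses its first ones. The helper pad_post is a stand-in written for this example, not the Keras function.

```python
def pad_post(sequences, maxlen, value=0):
    """Pad at the end with `value`; over-long sequences keep their last `maxlen` items."""
    padded = []
    for seq in sequences:
        seq = list(seq)[-maxlen:]  # 'pre' truncation: drop tokens from the front
        padded.append(seq + [value] * (maxlen - len(seq)))
    return padded


print(pad_post([[5, 2], [7, 1, 4, 9, 3]], maxlen=4))
# [[5, 2, 0, 0], [1, 4, 9, 3]]
```

If losing the start of a long sentence is undesirable, pad_sequences also accepts truncating='post'.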

8. Convert your tokenized training data into sequences. Use a maximum length of 40 and name the arrays train_X and train_Y.

eng_length = 40

nld_length = 40

# prepare training data

train_X = encode_sequences(nld_tokenizer, nld_length, train[:, 1])

train_Y = encode_sequences(eng_tokenizer, eng_length, train[:, 0])

9. In the same way, convert your tokenized test data into sequences and name the arrays test_X and test_Y.

# prepare test data

test_X = encode_sequences(nld_tokenizer, nld_length, test[:, 1])

test_Y = encode_sequences(eng_tokenizer, eng_length, test[:, 0])

10. Define a Sequence-to-Sequence (Seq2Seq) model architecture using an embedding layer as input layer, an LSTM layer as encoder and another LSTM layer followed by a Dense layer as decoder. Make this a function and name it build_model(). Define different input arguments for your function including the embedding_size and the number of LSTM units.

# build NMT model

def build_model(in_vocab, out_vocab, in_timesteps, out_timesteps, embedding_size, LSTMunits):

    model = Sequential()

    # encoder: embed the source tokens and compress the sentence into one vector

    model.add(Embedding(in_vocab, embedding_size, input_length=in_timesteps, mask_zero=True))

    model.add(LSTM(LSTMunits))

    # decoder: repeat that vector for every output step and predict one word per step

    model.add(RepeatVector(out_timesteps))

    model.add(LSTM(LSTMunits, return_sequences=True))

    model.add(Dense(out_vocab, activation='softmax'))

    return model

11. Create a model by calling the function with embedding_size of 300 and 512 units for the LSTM layers.

model = build_model(nld_vocab_size,

eng_vocab_size,

nld_length,

eng_length,

300,

512)

12. Compile the model with the RMSprop optimizer and sparse_categorical_crossentropy for loss.

# learning_rate replaces the deprecated lr argument in TensorFlow 2.x

model.compile(optimizer=optimizers.RMSprop(learning_rate=0.001),

              loss='sparse_categorical_crossentropy',

              metrics=['accuracy'])

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type)                 Output Shape              Param #

=================================================================

embedding (Embedding)        (None, 40, 300)           3838200

_________________________________________________________________

lstm (LSTM)                  (None, 512)               1665024

_________________________________________________________________

repeat_vector (RepeatVector) (None, 40, 512)           0

_________________________________________________________________

lstm_1 (LSTM)                (None, 40, 512)           2099200

_________________________________________________________________

dense (Dense)                (None, 40, 9073)          4654449

=================================================================

Total params: 12,256,873

Trainable params: 12,256,873

Non-trainable params: 0

_________________________________________________________________
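The parameter counts in the summary above can be checked by hand. The sketch below uses the standard formulas for embedding, LSTM and dense layers (an LSTM has four gates, each with input weights, recurrent weights and a bias); the helper names are made up for this example.

```python
def embedding_params(vocab_size, embedding_size):
    return vocab_size * embedding_size


def lstm_params(input_dim, units):
    # 4 gates, each with input weights, recurrent weights and a bias vector
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    # weight matrix plus one bias per output unit
    return (input_dim + 1) * units


counts = [
    embedding_params(12794, 300),  # Dutch vocabulary, embedding size 300
    lstm_params(300, 512),         # encoder LSTM
    lstm_params(512, 512),         # decoder LSTM
    dense_params(512, 9073),       # softmax over the English vocabulary
]
print(counts, sum(counts))
```

Most of the parameters sit in the embedding and the output layer, so both vocabulary sizes drive the model size.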

Note that we have used 'sparse_categorical_crossentropy' as the loss function as it allows us to use the target sequence as it is instead of one-hot encoded format. One-hot encoding the target sequences with such a huge vocabulary might consume your system's entire memory.
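A rough back-of-the-envelope sketch of the memory argument, followed by a check that both loss variants give the same value for a single prediction. The training-set size of 43,977 is an assumption derived from the 54,972 pairs minus the 10,995 test rows shown later; 4 bytes per value assumes float32 or int32 storage.

```python
import math

n_train = 43977   # assumed: 54,972 pairs minus 10,995 test rows
seq_len = 40      # padded target length
vocab = 9073      # English vocabulary size
bytes_per_value = 4

one_hot_bytes = n_train * seq_len * vocab * bytes_per_value
sparse_bytes = n_train * seq_len * bytes_per_value
print(f"one-hot targets: {one_hot_bytes / 1e9:.1f} GB")
print(f"integer targets: {sparse_bytes / 1e6:.1f} MB")

# Both losses agree on a single step: a toy softmax output and its true class.
probs = [0.1, 0.7, 0.2]
target = 1
sparse_loss = -math.log(probs[target])
one_hot = [1.0 if i == target else 0.0 for i in range(len(probs))]
categorical_loss = -sum(y * math.log(p) for y, p in zip(one_hot, probs))
print(sparse_loss, categorical_loss)
```

The two losses are the same quantity; the sparse variant only changes how the targets are stored.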

13. Fit the model with your desired number of epochs (e.g. 1 :)), validation_split of 0.2, and batch_size of 128. You can use smaller values for the number of LSTM units (100) and embedding size (50) if it takes a lot of time to run.

# history = model.fit(train_X, train_Y.reshape(train_Y.shape[0], train_Y.shape[1], 1),

# epochs=2,

# batch_size=128,

# validation_split = 0.2,

# verbose=1)

With ModelCheckpoint you can save your best model during the training. We used this option of Keras to run our neural translator for 15 epochs and save the model for your use.

filename = 'model.15Epochs'

checkpoint = ModelCheckpoint(filename,

monitor='val_loss',

verbose=1,

save_best_only=True,

mode='min')

history = model.fit(train_X, train_Y.reshape(train_Y.shape[0], train_Y.shape[1], 1),

epochs=15,

batch_size=128,

validation_split = 0.2,

callbacks=[checkpoint],

verbose=1)

Epoch 1/15

Epoch 00001: val_loss improved from inf to 0.89008, saving model to model.15Epochs

275/275 [==============================] - 662s 2s/step - loss: 1.0358 - accuracy: 0.8638 - val_loss: 0.8901 - val_accuracy: 0.8688

Epoch 2/15

Epoch 00002: val_loss improved from 0.89008 to 0.79787, saving model to model.15Epochs

275/275 [==============================] - 691s 3s/step - loss: 0.8327 - accuracy: 0.8756 - val_loss: 0.7979 - val_accuracy: 0.8799

Epoch 3/15

Epoch 00003: val_loss improved from 0.79787 to 0.75407, saving model to model.15Epochs

275/275 [==============================] - 708s 3s/step - loss: 0.7589 - accuracy: 0.8841 - val_loss: 0.7541 - val_accuracy: 0.8871

Epoch 4/15

Epoch 00004: val_loss improved from 0.75407 to 0.69438, saving model to model.15Epochs

275/275 [==============================] - 769s 3s/step - loss: 0.6980 - accuracy: 0.8914 - val_loss: 0.6944 - val_accuracy: 0.8930

Epoch 5/15

Epoch 00005: val_loss improved from 0.69438 to 0.66017, saving model to model.15Epochs

275/275 [==============================] - 789s 3s/step - loss: 0.6487 - accuracy: 0.8966 - val_loss: 0.6602 - val_accuracy: 0.8972

Epoch 6/15

Epoch 00006: val_loss improved from 0.66017 to 0.62693, saving model to model.15Epochs

275/275 [==============================] - 776s 3s/step - loss: 0.6044 - accuracy: 0.9013 - val_loss: 0.6269 - val_accuracy: 0.9006

Epoch 7/15

Epoch 00007: val_loss improved from 0.62693 to 0.60318, saving model to model.15Epochs

275/275 [==============================] - 770s 3s/step - loss: 0.5637 - accuracy: 0.9053 - val_loss: 0.6032 - val_accuracy: 0.9033

Epoch 8/15

Epoch 00008: val_loss improved from 0.60318 to 0.58197, saving model to model.15Epochs

275/275 [==============================] - 765s 3s/step - loss: 0.5271 - accuracy: 0.9090 - val_loss: 0.5820 - val_accuracy: 0.9054

Epoch 9/15

Epoch 00009: val_loss improved from 0.58197 to 0.56812, saving model to model.15Epochs

275/275 [==============================] - 1005s 4s/step - loss: 0.4937 - accuracy: 0.9128 - val_loss: 0.5681 - val_accuracy: 0.9074

Epoch 10/15

Epoch 00010: val_loss improved from 0.56812 to 0.55173, saving model to model.15Epochs

275/275 [==============================] - 1010s 4s/step - loss: 0.4634 - accuracy: 0.9163 - val_loss: 0.5517 - val_accuracy: 0.9094

Epoch 11/15

Epoch 00011: val_loss improved from 0.55173 to 0.53879, saving model to model.15Epochs

275/275 [==============================] - 1046s 4s/step - loss: 0.4352 - accuracy: 0.9195 - val_loss: 0.5388 - val_accuracy: 0.9103

Epoch 12/15

Epoch 00012: val_loss improved from 0.53879 to 0.53323, saving model to model.15Epochs

275/275 [==============================] - 1036s 4s/step - loss: 0.4089 - accuracy: 0.9229 - val_loss: 0.5332 - val_accuracy: 0.9116

Epoch 13/15

Epoch 00013: val_loss improved from 0.53323 to 0.52817, saving model to model.15Epochs

275/275 [==============================] - 1049s 4s/step - loss: 0.3835 - accuracy: 0.9264 - val_loss: 0.5282 - val_accuracy: 0.9121

Epoch 14/15

Epoch 00014: val_loss improved from 0.52817 to 0.52340, saving model to model.15Epochs

275/275 [==============================] - 1055s 4s/step - loss: 0.3601 - accuracy: 0.9299 - val_loss: 0.5234 - val_accuracy: 0.9136

Epoch 15/15

Epoch 00015: val_loss improved from 0.52340 to 0.52015, saving model to model.15Epochs

275/275 [==============================] - 1095s 4s/step - loss: 0.3382 - accuracy: 0.9330 - val_loss: 0.5201 - val_accuracy: 0.9139
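The accuracy values in this log start at about 0.86, which looks high for a translator after one epoch. A likely explanation (an interpretation, not something the practical states) is that the padded zero positions of the targets count toward the metric: with a mean English length of about 5.6 words and 40 positions per sentence, most positions are padding. The sketch below shows the effect on a made-up example; token_accuracy is a hypothetical helper, not a Keras metric.

```python
def token_accuracy(y_true, y_pred, ignore_padding=False, pad_id=0):
    """Share of positions where prediction and target agree."""
    correct = total = 0
    for true_seq, pred_seq in zip(y_true, y_pred):
        for t, p in zip(true_seq, pred_seq):
            if ignore_padding and t == pad_id:
                continue  # skip padded target positions
            total += 1
            correct += int(t == p)
    return correct / total


# Made-up target and prediction: only 1 of the 3 real words is right.
y_true = [[4, 9, 2, 0, 0, 0, 0, 0]]
y_pred = [[4, 7, 3, 0, 0, 0, 0, 0]]

acc_all = token_accuracy(y_true, y_pred)
acc_words = token_accuracy(y_true, y_pred, ignore_padding=True)
print(acc_all, acc_words)

# Share of padding in the English targets, using the mean length reported earlier.
padding_share = 1 - 5.619569962890199 / 40
print(round(padding_share, 4))
```

Under this assumption, a model that predicts only padding would already score close to 0.86, so the reported accuracy is best read relative to that baseline.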

14. Plot the accuracy and loss of your model for the training and validation sets.

We will use the plot_history function from the previous practical:

plt.style.use('ggplot')

def plot_history(history, val=0):

    acc = history.history['accuracy']

    if val == 1:

        val_acc = history.history['val_accuracy'] # we can add a validation set in our fit function with nn

    loss = history.history['loss']

    if val == 1:

        val_loss = history.history['val_loss']

    x = range(1, len(acc) + 1)

    plt.figure(figsize=(12, 5))

    plt.subplot(1, 2, 1)

    plt.plot(x, acc, 'b', label='Training accuracy')

    if val == 1:

        plt.plot(x, val_acc, 'r', label='Validation accuracy')

    plt.ylabel('accuracy')

    plt.xlabel('epoch')

    plt.title('Accuracy')

    plt.legend()

    plt.subplot(1, 2, 2)

    plt.plot(x, loss, 'b', label='Training loss')

    if val == 1:

        plt.plot(x, val_loss, 'r', label='Validation loss')

    plt.ylabel('loss')

    plt.xlabel('epoch')

    plt.title('Loss')

    plt.legend()

plot_history(history, val=1)

You can also use the following code without the plot_history function:

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.legend(['train','validation'])

plt.show()

15. Predict translations for the test set.

You can continue using your model, or you can use the following code to load the model that was trained for 15 epochs. Remember that our chosen architecture for a deep learning encoder-decoder translation model is very simple.

# model = load_model('model.15Epochs')

# predict_classes is deprecated in TensorFlow (scheduled for removal after 2021-01-01);

# taking the argmax over the softmax output of predict() gives the same class indices

preds = argmax(model.predict(test_X), axis=-1)

preds

array([[ 6, 1044, 330, ..., 0, 0, 0],

[ 2, 184, 23, ..., 0, 0, 0],

[ 2, 416, 7, ..., 0, 0, 0],

...,

[ 4, 49, 365, ..., 0, 0, 0],

[ 120, 143, 486, ..., 0, 0, 0],

[ 3, 35, 19, ..., 0, 0, 0]], dtype=int64)

As you can see, preds contains only word indices, so we need to convert them to words to be able to read them.

16. Use the sequences_to_texts function to convert the indices in your predictions back to words.

preds_text = eng_tokenizer.sequences_to_texts(preds)

# preds_text
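Conceptually, this conversion is a reverse lookup from index to word in which the padding index 0 has no word and is skipped. The sketch below illustrates that idea with a made-up vocabulary; decode is a hypothetical helper and not the Keras implementation.

```python
# Made-up reverse vocabulary; index 0 is padding and therefore absent.
index_word = {1: "the", 2: "light", 3: "is", 4: "green"}


def decode(sequence, index_word):
    """Join the words for all known indices; unknown indices such as 0 are skipped."""
    return " ".join(index_word[i] for i in sequence if i in index_word)


decoded = decode([1, 2, 3, 4, 0, 0], index_word)
print(decoded)  # the light is green
```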

17. Create a new dataframe with three columns where you show the input Dutch text of the test set, the actual output, and your predictions. Use the sample() function with your dataframe to randomly check some of the lines.

pred_df = pd.DataFrame({'input' : test[:, 1], 'actual output' : test[:,0], 'predicted output' : preds_text})

#pd.set_option('display.max_colwidth', 50)

pred_df.head(15)

| | input | actual output | predicted output |

|---|---|---|---|

| 0 | een aap beklimt een hoge boom | a monkey is climbing up a tall tree | a earthquake wearing a a a |

| 1 | de vredesonderhandelingen beginnen deze week | the peace talks begin this week | the children for week |

| 2 | het licht is groen | the light is green | the light is green |

| 3 | krijgt u genoeg zuurstof | are you getting enough oxygen | are you enough enough |

| 4 | zijn ze in leven | are they alive | are we in |

| 5 | veel mooie bloemen bloeien in de lente | many pretty flowers bloom in the spring | many flowers are made in are |

| 6 | onbevoegden zijn niet toegestaan op het terrein | no unauthorised persons will be permitted inside the perimeter | dont not not on the the |

| 7 | ik weet dat ik het erin heb gestopt | i know i packed it | i know i see it |

| 8 | het water stijgt | the water is rising | the ship is is |

| 9 | het is iets wat ze altijd wilden doen | its something they always wanted to do | its is something i can to do |

| 10 | ze is net twintig geworden | she has just turned twenty | she just just been |

| 11 | in de zomer draag ik shirts met korte mouwen | in the summer i wear shortsleeved shirts | i in i i in very |

| 12 | je kunt erop vertrouwen dat hij zijn woord zal houden | you can trust him to keep his word | you can know him to his his |

| 13 | zullen we lopen of met de auto gaan | shall we walk or drive | will we go to the the bus |

| 14 | laten we honkbal spelen | lets play baseball | lets play baseball |

pred_df

| | actual | predicted |

|---|---|---|

| 0 | a monkey is climbing up a tall tree | a earthquake wearing a a a |

| 1 | the peace talks begin this week | the children for week |

| 2 | the light is green | the light is green |

| 3 | are you getting enough oxygen | are you enough enough |

| 4 | are they alive | are we in |

| ... | ... | ... |

| 10990 | i like dogs | i love dogs |

| 10991 | i noticed you didnt finish your dinner | i didnt you you your your your |

| 10992 | tom didnt even know who mary was | tom didnt even know mary was was |

| 10993 | few people are able to understand his theories | some people decided people is are are are |

| 10994 | you can leave me alone | you can me me |

10995 rows × 2 columns

pred_df.sample(15)

| | input | actual output | predicted output |

|---|---|---|---|

| 4236 | wat heb je besloten | what have you decided | what did you |

| 3325 | hij keek neer op de vallei onder hem | he looked down at the valley below | he looked out out the the |

| 2855 | ik denk dat dat aan tom verteld moet worden | i think tom needs to be told that | i think tom should to to |

| 3365 | tom heeft een hekel aan klerken | tom hates clerks | tom hates hates |

| 916 | het werd in olie gebakken | that was cooked in oil | it was in in |

| 9413 | waarom zijn jullie getrouwd | why are you married | why are you married |

| 8726 | de politiemannen arresteerden de inbreker | the policemen arrested the burglar | the moon says the means |

| 3322 | ik zal terug zijn om 230 | ill be back at 230 | ill be back by |

| 6718 | ik kan me niet herinneren wat tom deed | i dont remember what tom did | i cant remember what did did |

| 10403 | zij stierven op het slachtveld | they died on the battlefield | she is on the south |

| 2019 | ik hou van wiskunde | i like math | i love math |

| 3495 | we hebben een half dozijn eieren | we have half a dozen eggs | we have a eggs eggs |

| 6171 | tom bezocht het rijksmuseum toen hij in amsterdam was | tom visited the national museum when he was in amsterdam | tom ran in when when he he in in |

| 2982 | goeiemorgen allemaal | good morning everybody | good are |

| 4825 | dat was nog maar het begin | that was only the beginning | it was just but |

Tatoeba.org (https://tatoeba.org/en/downloads) has a large database of example sentences translated into many languages by volunteers. To get better data for your neural machine translator, you can use this tool to generate and download customized sentence pairs. For example, it has more than one million sentence pairs translated from Dutch to English. This time, try to tune the hyperparameters and add an attention layer after the dense layer.
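For readers who have not met attention before, the sketch below shows the core computation of generic dot-product attention in plain Python: a decoder-side query is scored against every encoder state, the scores are turned into weights with a softmax, and the weighted average of the states becomes the context vector. The vectors are made up, and this is not a Keras layer or the setup of this practical; note also that in most published designs attention connects the decoder to the encoder states.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_attention(query, encoder_states):
    """Return attention weights and the weighted average of the encoder states."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[d] for w, state in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return weights, context


# Three made-up encoder states (one per source word) and one decoder query.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [2.0, 0.0]

weights, context = dot_attention(query, encoder_states)
print(weights, context)
```

The states that align best with the query receive the largest weights, so the decoder can focus on different source words at each output step instead of relying on one fixed sentence vector.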